One of the goals of my Masters Thesis is to make the web’s spatial data resources discoverable from a single portal. It builds on the earlier work of the entire OpenGeoPortal team. Two ideas supporting the thesis are discussed in the following paragraphs. They are: crawling the web for spatial data and parsing and rendering shapefiles in a web browser. It should be noted that during the development of the OGC protocols, the approaches discussed below would not have been possible. The early web held many more limitations. It is only due to recent enhances in browser performance and advances in the HTML5 standard that allow these new approaches to be prototyped and analyzed.

If you would like to talk about or criticize these ideas, you can contact me at Stephen.McDonald@tufts.edu.

Crawling the web for spatial data

Research universities employ OpenGeoPortal (OGP) to make their GIS repositories easily accessible. OGP’s usefulness is partially a function of the data sets it makes available. OGP, like most GIS portals, relies on a somewhat manual ingest process. Metadata must be created, verified and ingested into an OGP instance. This process helps assure very high quality metadata. However this manual nature limits the rate at which data can be ingested. If the goal is to build an index of the world’s spatial resources, a partially manual ingest process will not scale. Instead it must be extremely automated. To achieve this goal, OGP Ingest now supports data discovery via web crawling.

Ingest via small-scale crawling

The easiest way for an organization to make their spatial data available on the web is with zip files. Typically, each zip file contains an individual shp file, an shx file, an xml file with metadata and any other files related to the data layer. For example, the UN’s Office for the Coordination of Humanitarian Affairs put their data on the web in this fashion (http://cod.humanitarianresponse.info/). OGP’s ingest process can automatically harvest these kind of spatial data sites. Via the OGP Ingest administration user interface, an OGP administrator enters the URL of a web site holding zipped shape files. The OGP crawler processes the site searching all its pages for HTML anchor links to zip files. Each individual zip file is analyzed for spatial resources. OGP ingest code iterates over the elements of each zip file to determine if it has a spatial resource. The user specified metadata quality standard is applied to the discovered resources to determine if they should be ingested. This allows an information rich site with potentially thousands of data layers to be completely and easily ingested simply by providing the URL of the site.

OGP’s ingest crawl code is built on Crawler4j. This crawl library follows robots.txt directives. The number of http requests per second is limited so as to not significantly increase the load on the data server. The crawler identifies itself as OpenGeoPortal (Tufts, http://www.OpenGeoPortal.org).

Ingest via large-scale crawling

Using Crawler4j works well for crawling a single site with a few hundred pages. However, the web is an extremely large data store and well beyond the crawl capabilities of a single server. Instead, it must be crawled using a large cluster of collaborating servers. A proof-of-concept system capable of crawling large portions of the web was developed using Hadoop, Amazon Web Services and CommonCrawl.org’s database. It successfully demonstrated the ability to crawl a massive number of web pages for spatial data.

Crawling based ingest versus traditional ingest

Crawling for data and metadata has several advantages over a more traditional ingest strategy. Since crawling often finds both data and metadata, the URL for the actual data is known and it can be used for data preview and downloading. The bounding box and other attributes of the metadata can be verified against the actual data during ingest. A hashcode of the shapefile can be computed and used to identify duplicate data. A hashcode of the different elements in the shapefile can be used to identify derivative data that share a common geometry file. Crawling builds on an extensive technology base. Small scale crawling builds on Crawler4j, large scale crawling can economically leverage large clusters via AWS Electric MapReduce and CommonCrawl.org’s database of web pages. Data providers need only stand up an Apache web server, they can limit crawling of data by maintaining a robots.txt file. Under appropriate circumstances, metadata can be enhanced using information scrapped from HTML pages. The importance of a layer can be estimated in part by the page rank of the layer linking to the zip file and the overall site rank of the data server.

Browser based rendering of spatial data

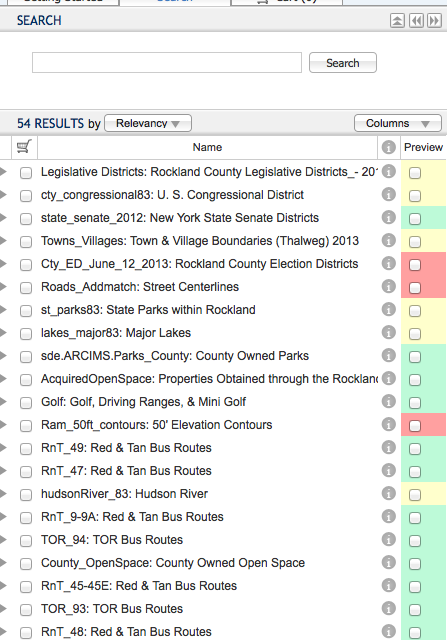

Crawling for spatial data makes it searchable in OGP. However that is not enough. OGP also provides the ability to preview and download data layers. Traditionally, these preview functions are provided by the OGP client code making OGC WMS requests to a map data server like GeoServer. GeoServer processes the shape files and delivers the corresponding requested image tiles. Each organization providing GIS data layers serves them using an instance of an OGC compliant data server (GeoServer) often with some additional supporting spatial data infrastructure (e.g., ESRI SDE or PostGIS). However, data discovered by an OGP crawl resided on an HTTP server and are not known to be accessible via OGC protocols. To make these layers previewable, code was developed to completely parse and render shapefiles in the browser. This works in coordination with a server side proxy. When the user requests a layer preview, the URL for the discovered zip file is retrieved from the repository of ingested layers. OGP browser code makes an ajax request to the OGP server passing the URL of the zip file. The OGP server uses an HTTP GET request to create a local cache copy of the zip file from the original data server. Then it responds to the ajax request with name of the shape file. The client browser then requests the shp and spx files from the OGP server, parses them as binary data and uses OpenLayers to render the result. The parsing is provided by Thomas Lahoda’s ShapeFile JavaScript library. It makes XMLHttpRequests for the shapefile elements and parses the result as a binary data stream. After parsing, a shapefile is rendered by dynamically creating a new OpenLayers layer object and populating it with features. Note that the cache copy on the OGP server is needed because JavaScript code can not make a cross-domain XMLHTTPRequest for a data file.

Advantages of browser based parsing and rendering

Browser based rendering of shapefiles has very different performance characteristics from traditional image tile rendering. This performance has not yet been analyzed. It is anticipated the performance for files over a couple megabytes will be slower because the entire zip file must be copied first to the OGP server acting as a proxy and then on to the client’s web browser. For very large data files, from dozens to hundreds of megabytes, the data transfer time would make the client side parsing and rendering impractical. Naturally, as networks and brwosers get faster, the time for this will decrease. There are a variety of approaches that might improve performance for large files. For example, one could use a persistent cache and create the local cache copy either during the discovery crawl phase or not delete the cache file after an initial request. One could increase performance for especially large shape files by generating a pyramid of shape files at different levels of detail when it is put in the cache and having the client request the appropriate level.

There are several advantages to moving the parsing and rendering of shape files to the client. First, the technology stack of the data server is simplified. Traditionally, an institution serving spatial data might use GeoServer as well as ESRI SDE on top of MS SQL all running on Tomcat and Apache. With client side parsing and rendering, an institution can serve spatial data using only Apache. This simplified server infrastructure leads to reduced operational overhead. The traditional approach requires publishing layers in GeoServer and keeping GeoServer synchronized with the spatial data infrastructure behind it. The client parsing and rendering solution requires only keeping an HTML page with links to the zip files up to date. A second advantage is scalability. The traditional approach requires an application data server to convert the shape files to image tiles. This centralizes a computationally significant task. Whenever the data is needed at a different scale or with different color attributes, OGC requests are created and server load is generated. When all parsing and rendering is done on the client, the data server is simply providing zip files. This allows it to serve many more users. As OGP requires scaling or changing a layer’s color it does not need to contact the server. Since the browser has the entire shape file, it can locally compute any needed transform. For efficiency, these transformations can leverage the client’s GPU. Finally, since any transform it possible it becomes possible to create a fully featured map building application in a browser.

Performance Measurement

Preliminary tests were run using the “Ground Cover Of East Timor” layer from the UN’s Food And Agriculture Organization. The zipped shape file is at http://www.fao.org:80/geonetwork/srv/en/resources.get?id=1285&fname=1285.zip&access=private. This file was used for initial testing because it is small and also easy to find using OGP. During this test, a tomcat server was run on a MacBook Pro laptop running over the Tufts University wireless network in Somerville, MA, USA. A Chrome browser instance connected to the OGP server using localhost. The FAO server is located in Rome, Italy.

Zip file size: 702k

Time to create local cache copy (on localhost): 2.9 to 3.9 seconds

Unzipped shp file size: 920k

Unzipped shx file size: 828bytes

Time to transfer shp and shx files to browser and parse them: .3 seconds

Time to render in OpenLayers: 1.3 seconds

Total time: 4.5 seconds to 5.5 seconds

The following screenshot shows the “Ground Cover Of East Timor” layer previewed in OpenGeoPortal. The Chrome Developer Tools panel at the bottom show the requests made by the browser. The first is an ajax request to create a local copy of the shape file on the OGP server. The server code makes an HTTP GET request to FAO’s server in Rome for the zip file, unzips the contents and returns name(s) of the shapefile(s). The next two requests in the network tab show the XMLHttpRequests calls from Thomas Lahoda’s shapefile library. This demonstrates the client is directly processing shapefiles rather than making WMS requests.

When parsing and rendering spatial data such as shapefiles in the browser, there is an initial time penalty for transferring all the original, raw data to the browser. This initial time is potentially offset by handling all future scaling events within the browser instead of making tile requests over the network. The profile for the potential higher initial load time versus the repeated requests for tiles may change as crawl based ingest makes spatial resources from servers around the globe available in a single portal. In the above example, only a single HTTP GET request was issued to FAO’s Rome based server rather than repeated WMS requests to it.